We set up an MQTT broker on Home Assistant (HA) so that our Lily ESP32 super remote can communicate with it. MQTT lets many different systems interact: clients publish and subscribe to topics on the broker, so they can exchange messages with each other as well as with HA.

Linux MQTT

Installing an MQTT client on the RPi is easy: I just install mosquitto-clients and can then send messages from the command line with mosquitto_pub.

If I use the same topic and message as Lily, it has the same effect. In the example below I publish "button 3" to the topic esp32/volume, and HA arranges for the volume to be turned up on my Sony amplifier.

This is great for testing, but I am unlikely to use the Linux command line much. I would, however, like to send MQTT messages from a browser, which lets me send them from a phone, iPad or PC.
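As a rough sketch, the same publish can be scripted from Python by wrapping mosquitto_pub. The broker address homeassistant.local and the helper names are assumptions made up for illustration, and the sketch assumes the broker accepts unauthenticated clients (otherwise mosquitto_pub also needs its -u and -P options).

```python
import shlex
import subprocess


def build_publish_command(host, topic, message, port=1883):
    """Return the mosquitto_pub argument list for a single message."""
    return [
        "mosquitto_pub",
        "-h", host,       # broker hostname or IP address
        "-p", str(port),  # plain MQTT port (not the websocket listener)
        "-t", topic,      # topic to publish on
        "-m", message,    # message payload
    ]


def publish(host, topic, message, port=1883):
    """Run mosquitto_pub; raises CalledProcessError if it exits non-zero."""
    subprocess.run(build_publish_command(host, topic, message, port), check=True)


# Hypothetical broker address; replace with your own HA host.
command = build_publish_command("homeassistant.local", "esp32/volume", "button 3")
# Show the equivalent shell command without sending anything.
print(shlex.join(command))
```

Calling publish("homeassistant.local", "esp32/volume", "button 3") would actually send the message, provided mosquitto-clients is installed and the broker is reachable.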

Browser MQTT

The Eclipse Paho MQTT JavaScript client appears to be a popular choice for a browser MQTT client, and Steve's Internet Guide provides a very clear example to get a client working. The browser client communicates with the MQTT broker over websockets. After some searching I found that, in addition to the MQTT port 1883, HA supports websockets and the broker websocket listener is on port 1884.

Using Steve's Internet Guide it was easy to set up JavaScript MQTTconnect/disconnect functions, associated with buttons on a simple webpage. The handlers required to deal with connection success / failure and incoming messages are also simple to implement, so I can show the status of the connection and any messages received on the page. Finally I provided "volume up" and "volume down" buttons to demonstrate that I can now control my amplifier volume by sending MQTT commands to HA, which then tells the Broadlink IR sender to send volume up/down commands to the amplifier.

The result is the very simple test webpage shown below. I can now easily add MQTT functionality to other webpages which need to control devices in the home, in particular my home music server.

It is great that MQTT is a flexible, general-purpose communications protocol which works with many different devices.

Webhooks

Early on in my HA investigations I set up webhooks so that I could trigger HA automations from a webpage. At the time I was more interested in voice control using Google Assistant, and webhooks duplicated what was more readily achieved through voice.

However it occurred to me that if I can use parameters / arguments with webhooks, they make a realistic alternative to MQTT for communication with HA. Webhooks communicate directly with HA rather than needing to connect to the MQTT broker and send a payload.

HA documentation indicates that it is possible:

Webhooks are implemented using POST requests, which I can most easily send using the Linux curl command.

I had some difficulty seeing the payload in HA until I added the -H parameter to specify JSON format (a Content-Type: application/json header). Once this was resolved, I could write an automation which displays the payload, trigger.json.payload, as a HA notification when the message is received.

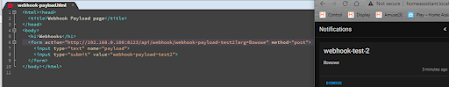
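For comparison, here is a minimal Python sketch of the same kind of JSON POST, using only the standard library. The host homeassistant.local:8123, the webhook id my-test-hook and the function name are assumptions for illustration; the /api/webhook/ path is HA's standard webhook endpoint. The JSON key payload is what the automation reads as trigger.json.payload.

```python
import json
import urllib.request


def build_webhook_request(base_url, webhook_id, payload):
    """Build (but do not send) a JSON POST request for an HA webhook."""
    url = f"{base_url}/api/webhook/{webhook_id}"
    body = json.dumps({"payload": payload}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        # Without this header HA does not parse the body into trigger.json.
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical host and webhook id; replace with your own.
request = build_webhook_request(
    "http://homeassistant.local:8123", "my-test-hook", "hello from Python"
)
# To actually send it: urllib.request.urlopen(request)
```

Building the request separately from sending it makes it easy to inspect the URL, headers and body before anything goes to HA.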

Of course I want to use webhook URLs in a webpage, so I coded a form to send an input text box named payload. In this case the item containing the information is trigger.data.payload. Rather than sending an input text field, I can add one or more parameters containing directives for HA to the webhook URL. I added a parameter called arg to the webhook URL and could access it in a HA template as trigger.query.arg. The example below shows both the form item payload and the URL arg being sent from a webpage. The URL arg is displayed as a HA notification whilst the payload is sent to the Google Nest Mini to be read aloud.
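The difference between the two channels can be sketched in Python as well: the arg value travels in the URL query string (trigger.query.arg), while payload travels in a form-encoded body as a browser form would send it (trigger.data.payload). The host, webhook id, function name and example values are again assumptions for illustration.

```python
import urllib.parse
import urllib.request


def build_form_webhook_request(base_url, webhook_id, arg, payload):
    """Build (but do not send) a form POST with an extra URL parameter."""
    # Goes into the URL, so HA exposes it as trigger.query.arg.
    query = urllib.parse.urlencode({"arg": arg})
    url = f"{base_url}/api/webhook/{webhook_id}?{query}"
    # Goes into the body like an HTML form field, so HA exposes it
    # as trigger.data.payload.
    body = urllib.parse.urlencode({"payload": payload}).encode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# Hypothetical host, webhook id and values; replace with your own.
form_request = build_form_webhook_request(
    "http://homeassistant.local:8123", "my-test-hook", "announce", "Dinner is ready"
)
# To actually send it: urllib.request.urlopen(form_request)
```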