I was pleased when I found that Home Assistant (HA) auto-discovers Jongos and allows you to play music on them. Unfortunately, my enthusiasm was dampened when I found that you can only specify a single track to play from Local Media or a DLNA server, which makes it of little use for albums or playlists. It is possible to specify and play radio channels using HA, which may be of interest in future. However, what I want is to use Jongos for playlists in the same way I use MPD.

Technical solutions

I knew that HA allows you to use Linux commands or shell scripts within HA scripts, so I thought there might be a solution there. Googling gave me the idea that I could use curl commands to send requests to the Jongos to play music. I investigated, and it is indeed possible to construct SOAP (Simple Object Access Protocol) requests to start music. The main problem is that Jongos use a variable port for communication, which you need to determine using SSDP (Simple Service Discovery Protocol), so some further work would be required to find the port numbers. In addition, SOAP requests are quite tedious to create by hand and would require extra work.
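To give an idea of the work involved, here is a minimal Python sketch (standard library only, Python 3.7+) of the two steps. The first step is an SSDP M-SEARCH multicast, whose replies carry a LOCATION URL that includes the device's port. The second step is a SOAP request for the standard UPnP AVTransport `Play` action. Several things are assumptions rather than anything I have confirmed for Jongos. I assume they answer as standard `MediaRenderer` devices. I assume you have already read the AVTransport control URL from the description XML at LOCATION. I also leave out the `SetAVTransportURI` call that loads a track before `Play`. The function names are illustrative.

```python
from __future__ import annotations

import socket
import urllib.request
from urllib.parse import urlparse

# Standard SSDP multicast group and port.
SSDP_ADDR = ("239.255.255.250", 1900)
# Assumption: Jongos answer searches for the standard UPnP MediaRenderer type.
MEDIA_RENDERER = "urn:schemas-upnp-org:device:MediaRenderer:1"
AVTRANSPORT = "urn:schemas-upnp-org:service:AVTransport:1"


def build_msearch(search_target: str = MEDIA_RENDERER, mx: int = 2) -> bytes:
    """SSDP M-SEARCH request: HTTP-style text sent over UDP multicast."""
    lines = [
        "M-SEARCH * HTTP/1.1",
        f"HOST: {SSDP_ADDR[0]}:{SSDP_ADDR[1]}",
        'MAN: "ssdp:discover"',
        f"MX: {mx}",
        f"ST: {search_target}",
        "",
        "",  # the two empty entries produce the terminating blank line
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_location(response: bytes) -> str | None:
    """Extract the LOCATION header (device description URL) from a reply."""
    for line in response.decode("utf-8", errors="replace").split("\r\n"):
        name, sep, value = line.partition(":")
        if sep and name.strip().lower() == "location":
            return value.strip()
    return None


def discover(timeout: float = 3.0) -> list[str]:
    """Multicast an M-SEARCH and collect the description URLs that answer."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.settimeout(timeout)
    locations: list[str] = []
    try:
        sock.sendto(build_msearch(), SSDP_ADDR)
        while True:
            data, _sender = sock.recvfrom(65507)
            location = parse_location(data)
            if location and location not in locations:
                locations.append(location)
    except socket.timeout:
        pass  # no more replies arrived within the timeout
    finally:
        sock.close()
    return locations


def device_port(location: str) -> int | None:
    """The variable port is simply part of the description URL."""
    return urlparse(location).port


def build_play_request(control_url: str) -> urllib.request.Request:
    """SOAP request for AVTransport Play; it only starts whatever track
    SetAVTransportURI has already loaded on the renderer."""
    body = (
        '<?xml version="1.0" encoding="utf-8"?>'
        '<s:Envelope xmlns:s="http://schemas.xmlsoap.org/soap/envelope/"'
        ' s:encodingStyle="http://schemas.xmlsoap.org/soap/encoding/">'
        f'<s:Body><u:Play xmlns:u="{AVTRANSPORT}">'
        "<InstanceID>0</InstanceID><Speed>1</Speed>"
        "</u:Play></s:Body></s:Envelope>"
    )
    return urllib.request.Request(
        control_url,
        data=body.encode("utf-8"),
        headers={
            "Content-Type": 'text/xml; charset="utf-8"',
            "SOAPACTION": f'"{AVTRANSPORT}#Play"',
        },
        method="POST",
    )
```

On the LAN, `discover()` should return description URLs of the form `http://<ip>:<port>/...`. Given a control URL, `urllib.request.urlopen(build_play_request(control_url), timeout=5)` would send the `Play` action. This is the plumbing that curl commands would have to reproduce, and much of what a library such as upnpclient automates.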

In fact, I have already done equivalent work in my own application, using the Python library upnpclient to help create the requests and control the devices. My solution uses web sockets to send requests from a web page to a web socket daemon on my application server, which then submits the requests to the Jongos using upnpclient. My second option was to send these web socket requests from an HA script to my app server using curl. Unfortunately, apart from its opening HTTP handshake, the WebSocket protocol is not very similar to HTTP, and most installed versions of curl don't support it.
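A plain HTTP tool falls short here for a specific reason. A WebSocket connection opens with an HTTP Upgrade handshake, in which the server hashes the client's `Sec-WebSocket-Key` to prove it received the request. After that, every message travels as a binary frame, and frames sent by a client must be masked with a fresh 4-byte key. The sketch below computes both pieces using the Python standard library, following RFC 6455. It is illustrative only and makes no assumptions about the message format the app server's web socket daemon expects.

```python
from __future__ import annotations

import base64
import hashlib
import os
import struct

# Fixed GUID defined by RFC 6455 for the opening handshake.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def accept_key(client_key: str) -> str:
    """Sec-WebSocket-Accept value a server must return for a client key."""
    digest = hashlib.sha1((client_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def text_frame(message: str, mask: bytes | None = None) -> bytes:
    """Encode one masked client-to-server text frame (FIN set, opcode 0x1)."""
    payload = message.encode("utf-8")
    if mask is None:
        mask = os.urandom(4)  # clients must use a fresh random mask per frame
    header = bytearray([0x81])  # FIN=1, opcode=1 (text)
    n = len(payload)
    if n < 126:
        header.append(0x80 | n)  # MASK bit plus 7-bit length
    elif n < 65536:
        header.append(0x80 | 126)  # MASK bit plus 16-bit extended length
        header += struct.pack("!H", n)
    else:
        header.append(0x80 | 127)  # MASK bit plus 64-bit extended length
        header += struct.pack("!Q", n)
    masked = bytes(b ^ mask[i % 4] for i, b in enumerate(payload))
    return bytes(header) + mask + masked
```

A real client would also have to check the `101 Switching Protocols` response and decode the server's frames. This is why a dedicated WebSocket client is normally used rather than a one-shot HTTP request.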

HA is written in Python, so it should be possible to run upnpclient Python scripts directly on the HA server. However, the documentation for HA's Python Scripts integration says that you can't use Python imports, so I would perhaps need to use the pyscript custom integration instead.

This may eventually prove the most effective solution, but for the moment I prefer to try using commands.

As the HA and application servers both run Linux, the easiest way to control the app server is with ssh commands, which is the approach I adopted.

Remote Login

Normally, in a terminal you can sign in to a remote server and run a command or script using the syntax: ssh user@192.168.0.nnn <command line>.

When you do this, the remote system asks for a password and may prompt you to add the server to your known_hosts file. If we are automating our commands within HA, this isn't acceptable.

Storing SSH keys so that passwords aren't needed is something I do quite often, but since HA uses containers the problem is not quite so straightforward. I found an excellent article by HA "Command Central" Mike that addresses and resolves these problems, which HA users regularly run into.

Because HA uses containers, you shouldn't store keys in the usual file, /root/.ssh/id_rsa, since HA software upgrades can erase the previous contents of /root. Instead, save the keys in a separate area (I used /config/.ssh/id_rsa) and give this location on your ssh command line with the -i option.

When you use the command line in HA, you are actually working in the SSH add-on's container, but when your commands are automated they are executed inside the Home Assistant container itself. So to test your commands, you need to jump into that container first: docker exec -it homeassistant bash.

Finally, since our key file is not in the usual place, we also have to tell ssh to keep the known_hosts file in the same place (the standard ssh option for this is -o UserKnownHostsFile=/config/.ssh/known_hosts). Otherwise, we would have to answer the known_hosts question repeatedly.
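It can help to see the options above gathered in one place. Here is a minimal Python sketch (3.8+, standard library) that builds the ssh argument list. It is an illustration rather than the exact command from Mike's article. It uses -i for the key file and -o UserKnownHostsFile for the known_hosts file, both under /config/.ssh as described. `BatchMode=yes` is an added assumption. It makes ssh fail instead of waiting at a password or host-key prompt, which suits automation. As a consequence, the first connection, which records the host key, has to be made interactively from inside the homeassistant container. The function names, user name, IP address and hello.sh path are placeholders.

```python
from __future__ import annotations

import shlex
import subprocess

# Key and known_hosts kept under /config/.ssh so they survive HA upgrades.
KEY_FILE = "/config/.ssh/id_rsa"
KNOWN_HOSTS = "/config/.ssh/known_hosts"


def ssh_argv(user: str, host: str, remote_command: str,
             key_file: str = KEY_FILE,
             known_hosts: str = KNOWN_HOSTS) -> list[str]:
    """Argument list for running one command on the app server over ssh."""
    return [
        "ssh",
        "-i", key_file,                             # key stored outside /root
        "-o", f"UserKnownHostsFile={known_hosts}",  # known_hosts beside the key
        "-o", "BatchMode=yes",                      # fail rather than prompt
        f"{user}@{host}",
        remote_command,                             # executed by the remote shell
    ]


def command_string(user: str, host: str, remote_command: str) -> str:
    """The same command as one shell-quoted line, e.g. for testing it
    inside the homeassistant container."""
    return shlex.join(ssh_argv(user, host, remote_command))


def run_remote(user: str, host: str, remote_command: str,
               timeout: float = 30) -> subprocess.CompletedProcess:
    """Run the remote command and capture its output (raises on timeout)."""
    return subprocess.run(ssh_argv(user, host, remote_command),
                          capture_output=True, text=True, timeout=timeout)
```

For example, `print(command_string("user", "192.168.0.10", "./hello.sh 'hello world'"))` prints a one-line command that can be pasted into the container after `docker exec -it homeassistant bash`. Building an argument list rather than a single string avoids one layer of shell quoting when the command is run from Python.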

Once we have done all this, we can test remote commands with some confidence. Here is a first use of the (quite long) ssh command string, running a simple hello.sh "hello world" script.

A working solution

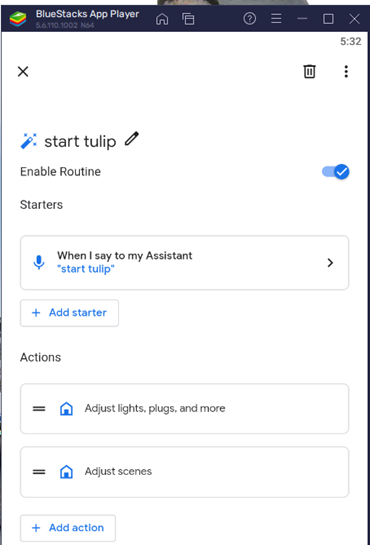

We can now proceed to set up a script to start our music.

First, we add a shell_command to our configuration file.

Thirdly, we can add a dashboard card to play the Jongo.